Hi there👋, welcome to my little world again! As always, it is a joy to see you around and I am glad that you are here to learn about scraping today using Selenium & Pandas.

I was inspired to write this tutorial because I am participating in Google Solution Challenge 2022 where we are required to use some of the Google products.

🔹 MpaMpe

With that, let me introduce you to MpaMpe, an online hybrid crowdfunding web app tailored for Ugandans. The platform will accept both crypto and fiat currency. Visit the MVP here.

Read More about Google Solution Challenge here;

I wanted to use Scrapy and Beautiful Soup in conjunction with requests but I later realised I can achieve all that with just Selenium and that's why I chose it.

To get started with Selenium, read my Selenium article here and learn how to install the specific drivers.

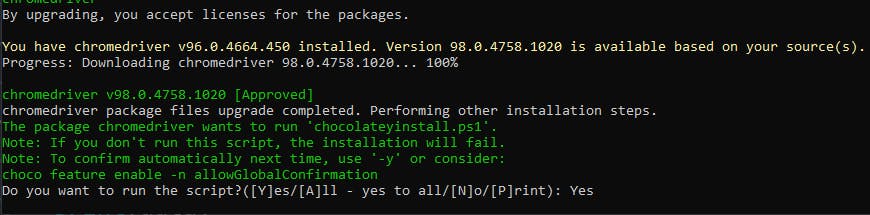

In my case, the Chrome driver was outdated, so I had to update it with choco upgrade chromedriver on Windows.

🔹 Challenge

Most websites are straightforward to scrape, but a website built with something like React or Angular, such as the Google Developers site, is harder to scrape and takes a few hacks and tricks to get the data out.

When a site also uses pagination, it becomes trickier. Since I love scraping, I took it on because I wanted to find out the various services Google has for developers and get a quick summary of them in one file.

#Ad

ScraperAPI is a web scraping API tool that works perfectly with Python, Node, Ruby, and other popular programming languages.

ScraperAPI handles billions of API requests related to web scraping, and if you use this link to get a product from them, I earn something.

So let's get started by importing our dependencies in a file:

🔹 The Code

import time

import pandas as pd
from selenium import webdriver
from selenium.webdriver.common.by import By

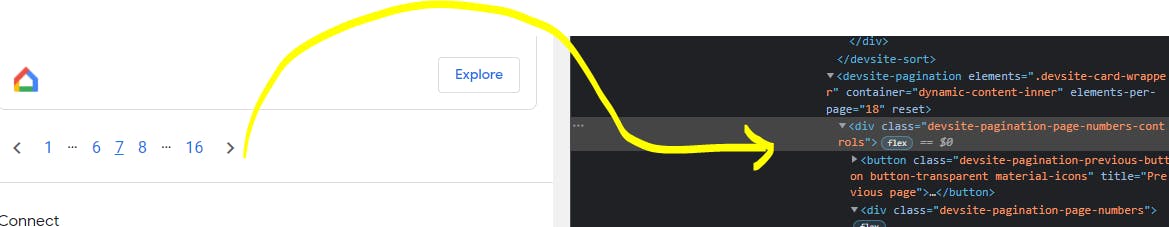

As you click the > button, the target URL does not change, so it does not tell us which page of the pagination we are on.

# Our target URL

url = "https://developers.google.com/products/"

# Initialise the driver

driver = webdriver.Chrome()
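One thing the script never does is close the browser when it is done. As a minimal sketch, and not part of the original script, a small context manager can make sure `quit()` is called even if scraping fails halfway. The name `managed_driver` is hypothetical; it only assumes the object has a `quit()` method, which Selenium drivers do.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(driver):
    """Yield the driver and always quit it afterwards."""
    try:
        yield driver
    finally:
        # Runs on success and on error, so no browser window is left behind
        driver.quit()
```

With Selenium this could be used as `with managed_driver(webdriver.Chrome()) as driver:` followed by the scraping steps indented inside the block.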

We are going to create reusable functions for the waiting time and for when we should sleep while querying the DOM, since it loads dynamically.

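def web_wait_time():
    # Implicit wait: element lookups keep retrying for up to 10 seconds
    return driver.implicitly_wait(10)

def web_sleep_time():
    # Hard pause of 10 seconds to let the dynamic content render
    return time.sleep(10)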
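A fixed `time.sleep(10)` always costs the full ten seconds, even when the page is ready sooner. A hedged alternative is to poll for a condition and stop as soon as it holds. The sketch below uses only the standard library and the hypothetical name `wait_until`; Selenium ships a similar idea as `WebDriverWait(driver, timeout).until(...)`, which you may prefer in a real script.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call `condition` until it returns something truthy or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            # Return whatever the condition produced, e.g. a list of elements
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

For example, `wait_until(lambda: driver.find_elements(By.CLASS_NAME, "devsite-card-content"))` would return the cards as soon as at least one is present, because an empty list counts as "not ready yet".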

I am using self-descriptive names, so together with the comments you should be able to follow what I am doing.

# Set the implicit wait before loading the website

web_wait_time()

# We can do our get request

driver.get(url)

# We can dump scraped data as lists for later use.

titles = []

summaries = []

Let's proceed to create the main function of our script to query the data we are actually searching for.

def all_products():
    """This is the main function that inspects and locates the DOM that loads dynamically
    to get the title & summary of the product.

    You can inspect the DOM with dev tools.
    """
    # From Chrome inspection, this is our main content container
    data = driver.find_elements(By.CLASS_NAME, "devsite-card-content")

    # We loop through to get the subsets or children elements
    # where our data resides
    for data_elements in data:
        # Title & summary locators
        data_title = data_elements.find_elements(By.TAG_NAME, "h3")
        data_summary = data_elements.find_elements(By.CLASS_NAME, "devsite-card-summary")

        for title in data_title:
            titles.append(title.text)
            # print(title.text)

        for summary in data_summary:
            summaries.append(summary.text)
            # print(summary.text)
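Appending titles and summaries to two separate lists works as long as every card has exactly one of each. As a sketch under that caveat, the variant below keeps each title together with its own summary so the two columns cannot drift apart. `card_to_row` and `collect_rows` are hypothetical names, and the two locator constants are simply the string values behind Selenium's `By.TAG_NAME` and `By.CLASS_NAME`, written out so the snippet has no imports.

```python
TAG_NAME = "tag name"      # same value as selenium's By.TAG_NAME
CLASS_NAME = "class name"  # same value as selenium's By.CLASS_NAME


def card_to_row(card):
    """Return (title, summary) for one card, using "" for a missing part."""
    headings = card.find_elements(TAG_NAME, "h3")
    summaries = card.find_elements(CLASS_NAME, "devsite-card-summary")
    title = headings[0].text.strip() if headings else ""
    summary = summaries[0].text.strip() if summaries else ""
    return title, summary


def collect_rows(cards):
    """Build one row per card and drop cards that have no title."""
    rows = [card_to_row(card) for card in cards]
    return [row for row in rows if row[0]]
```

Calling `collect_rows(driver.find_elements(By.CLASS_NAME, "devsite-card-content"))` would then give a list of pairs that can be passed straight to `pd.DataFrame(rows, columns=['Product', 'Summary'])`.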

We allow the data to load:

# Let's wait again to load, implicitly
web_wait_time()

# Let's allow the DOM to load first before we start
# getting the next dynamic pages.
web_sleep_time()

Our next piece of code is also very important because it walks through the pages to collect the data, and I had to find the number of pages to loop through.

The click() method helps navigate through the dynamic pages.

for page in range(1, 17):
    driver.find_element(By.LINK_TEXT, str(page)).click()
    all_products()
    web_sleep_time()
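The loop above hard-codes 16 pages, which needs updating whenever the number of pages changes. A possible variation, assuming every page number is rendered as a link with that text (as the loop above already assumes), keeps clicking until a page link is no longer found. `visit_pages` is a hypothetical helper; it relies on `find_elements`, which returns an empty list rather than raising when nothing matches.

```python
import time

LINK_TEXT = "link text"  # same value as selenium's By.LINK_TEXT


def visit_pages(driver, scrape_page, max_pages=50, pause=10):
    """Click numbered page links until one is missing; return the pages visited."""
    visited = 0
    for page in range(1, max_pages + 1):
        links = driver.find_elements(LINK_TEXT, str(page))
        if not links:
            # No link for this page number, so we assume we are past the last page
            break
        links[0].click()
        scrape_page()      # e.g. all_products
        time.sleep(pause)  # give the next page time to render
        visited += 1
    return visited
```

In the script this would be called as `visit_pages(driver, all_products)`. The `max_pages` cap is only a safety net against looping forever.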

Now, the data we have contains empty strings that were scraped from empty link texts.

So let's handle that with the powerful list comprehensions. Read about them in my article here.

cleaned_titles = [data for data in titles if data != ""]

cleaned_summaries = [data for data in summaries if data != ""]
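To see what the comprehensions do, here is a tiny worked example with made-up values. It also adds a length check, since `zip` stops at the shorter list without complaining, so mismatched lists would otherwise drop rows silently. The sample data and the helper name `clean` are illustrative only.

```python
def clean(values):
    """Drop empty strings, keeping the original order."""
    return [value for value in values if value != ""]


# Made-up sample data, shaped like the scraped lists
sample_titles = ["Android", "", "Firebase", ""]
sample_summaries = ["Build mobile apps", "", "App development platform", ""]

kept_titles = clean(sample_titles)
kept_summaries = clean(sample_summaries)

# zip() truncates to the shorter input, so verify the lengths first
if len(kept_titles) != len(kept_summaries):
    raise ValueError("titles and summaries are out of step")

rows = list(zip(kept_titles, kept_summaries))
print(rows)
```

If the check ever fails on real data, it means some card had a title without a summary (or the reverse), and the rows would no longer line up.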

The main reason for all this is finally here: we need to save the scraped data in a file.

products_dataframe = pd.DataFrame(list(zip(cleaned_titles, cleaned_summaries)), columns =['Product', 'Summary'])

products_dataframe.to_csv('Google_Products.csv', sep='\t')

I created a data frame by zipping the two lists together to have meaningful columns.

Running the script will take some time, but you should get the CSV file in the same root folder.
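Note that the file is written with `sep='\t'`, so despite the `.csv` name it is tab-separated and has to be read back with the same separator. With pandas that would be `pd.read_csv('Google_Products.csv', sep='\t', index_col=0)`. The sketch below shows the same idea using only the standard library `csv` module on a made-up two-row sample shaped like the script's output; the rows are placeholders, not real scraped data.

```python
import csv
import io

# Made-up sample in the layout that to_csv(sep='\t') produces:
# an unnamed index column followed by Product and Summary
sample = (
    "\tProduct\tSummary\n"
    "0\tAndroid\tBuild mobile apps\n"
    "1\tFirebase\tApp development platform\n"
)

reader = csv.reader(io.StringIO(sample), delimiter="\t")
header = next(reader)

# Skip the first (index) column and map the remaining cells to their headers
products = [dict(zip(header[1:], row[1:])) for row in reader]
print(products)
```

Opening the file in a spreadsheet tool works the same way: pick tab as the delimiter when importing.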

Yay 🚀 Over 280 Google services summarised.

Check GitHub Repo & Star it 🚀🚀

🔹 Wait

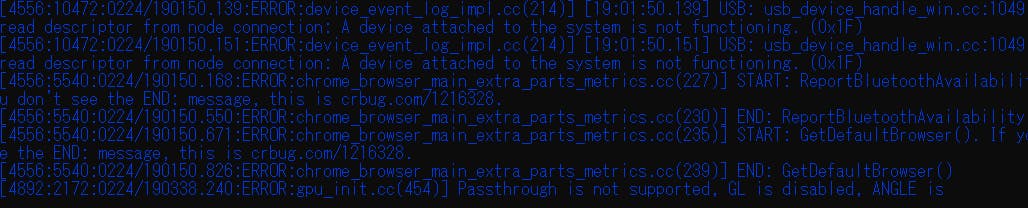

Depending on the OS environment and script dependency updates, you might also get these minor warnings or errors in the snip below

🔸 Fixing some of the minor errors:

📌 Pass-Through Error

📌 Windows OS Errors

Regardless of those warnings in the command line, you can add these few lines at the top of our file or run pip install pywin32 to clear them; otherwise, they are known minor bugs.

# Fixing minor warnings in case you experience any
from selenium.webdriver.chrome.options import Options

# Fixing some minor Chrome errors and Windows OS errors
options = Options()
options.add_experimental_option('excludeSwitches', ['enable-logging'])

# For these options to take effect, initialise the driver with
# webdriver.Chrome(options=options)

🔹 Summary

So the whole logic was to loop through every page, wait for the content, scrape it, save it temporarily, and finally write the accumulated data to a CSV file.

If you are a university student who is also part of the Google Solution Challenge and have found this useful, please comment below. If you are here and you also learnt something about scraping, please give a thumbs up.

You can watch Video Implementation here

🔹 Conclusion

Once again, hope you learned some automation & scraping today.

Please consider subscribing or following me for related content, especially about Tech, Python & General Programming.

You can show extra love by buying me a coffee to support this free content and I am also open to partnerships, technical writing roles, collaborations and Python-related training or roles.

📢 You can also follow me on Twitter : ♥ ♥ Waiting for you! 🙂
